Memory Bandwidth for Data Science: Why 672GB/s Matters

Is your model training lagging? 📉 Discover why memory bandwidth for data science is critical. We explain how 672GB/s throughput accelerates machine learning and reduces bottlenecks for faster insights. 🚀

Staring at a progress bar while your AI model trains is a special kind of frustration. You've got the cores, you've got the algorithm... so what's the holdup? Often, the culprit is a hidden bottleneck: memory bandwidth. For South African data scientists and AI developers, understanding this single spec is the key to unlocking true performance. A figure like 672GB/s isn't just marketing fluff; it's the difference between a breakthrough and a backlog. 🚀

What is Memory Bandwidth in Data Science?

Think of your GPU as a high-tech factory. The CUDA cores are the workers on the assembly line, and the data is the raw material. Memory bandwidth for data science is the speed and width of the conveyor belt that feeds those workers. If the belt is too slow or too narrow, your workers stand around waiting, and production grinds to a halt. This is exactly what happens when a powerful GPU is starved for data.

In technical terms, it’s the rate at which the processor can read data from, or write data to, the GPU's memory (VRAM). For tasks involving massive datasets, like training a neural network, this speed is paramount. You need a GPU that can keep its cores fed, and that's why high-end NVIDIA GeForce graphics cards are so popular in this field.
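The idea of cores "waiting for data" can be made concrete with the roofline model, a standard way to reason about whether a workload is limited by memory bandwidth or by compute. This is an illustrative sketch: the 40 TFLOPS peak is an assumed round figure, not a quoted spec for any card, and the byte counts assume each operand crosses the memory bus exactly once (the ideal case).

```python
# Roofline sketch: is a workload limited by memory bandwidth or by compute?
BANDWIDTH_GBPS = 672.0   # GB/s, the figure discussed in this article
PEAK_TFLOPS = 40.0       # ASSUMPTION: illustrative FP32 peak, not a quoted spec


def ridge_point(peak_tflops: float, bandwidth_gbps: float) -> float:
    """FLOPs per byte at which the bottleneck shifts from memory to compute."""
    return (peak_tflops * 1e12) / (bandwidth_gbps * 1e9)


def attainable_tflops(intensity: float, peak_tflops: float, bandwidth_gbps: float) -> float:
    """Roofline cap: min(compute peak, arithmetic intensity x bandwidth), in TFLOPS."""
    return min(peak_tflops, intensity * bandwidth_gbps * 1e9 / 1e12)


# Element-wise add of two float32 vectors: 1 FLOP per element, 12 bytes moved (2 reads + 1 write).
vector_add_intensity = 1 / 12
# Square float32 matmul of size n: 2*n^3 FLOPs over at least 3*n^2*4 bytes, i.e. n/6 FLOPs per byte.
n = 4096
matmul_intensity = (2 * n**3) / (3 * n**2 * 4)

ridge = ridge_point(PEAK_TFLOPS, BANDWIDTH_GBPS)
for name, ai in [("vector add", vector_add_intensity), ("4096x4096 matmul", matmul_intensity)]:
    bound = "memory-bound" if ai < ridge else "compute-bound"
    perf = attainable_tflops(ai, PEAK_TFLOPS, BANDWIDTH_GBPS)
    print(f"{name}: {ai:.2f} FLOP/byte -> {perf:.2f} TFLOPS ({bound})")
```

Under these assumptions the ridge point is about 60 FLOPs per byte: the vector add sits far below it and is capped by bandwidth, while a large matrix multiply sits well above it and is capped by compute.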

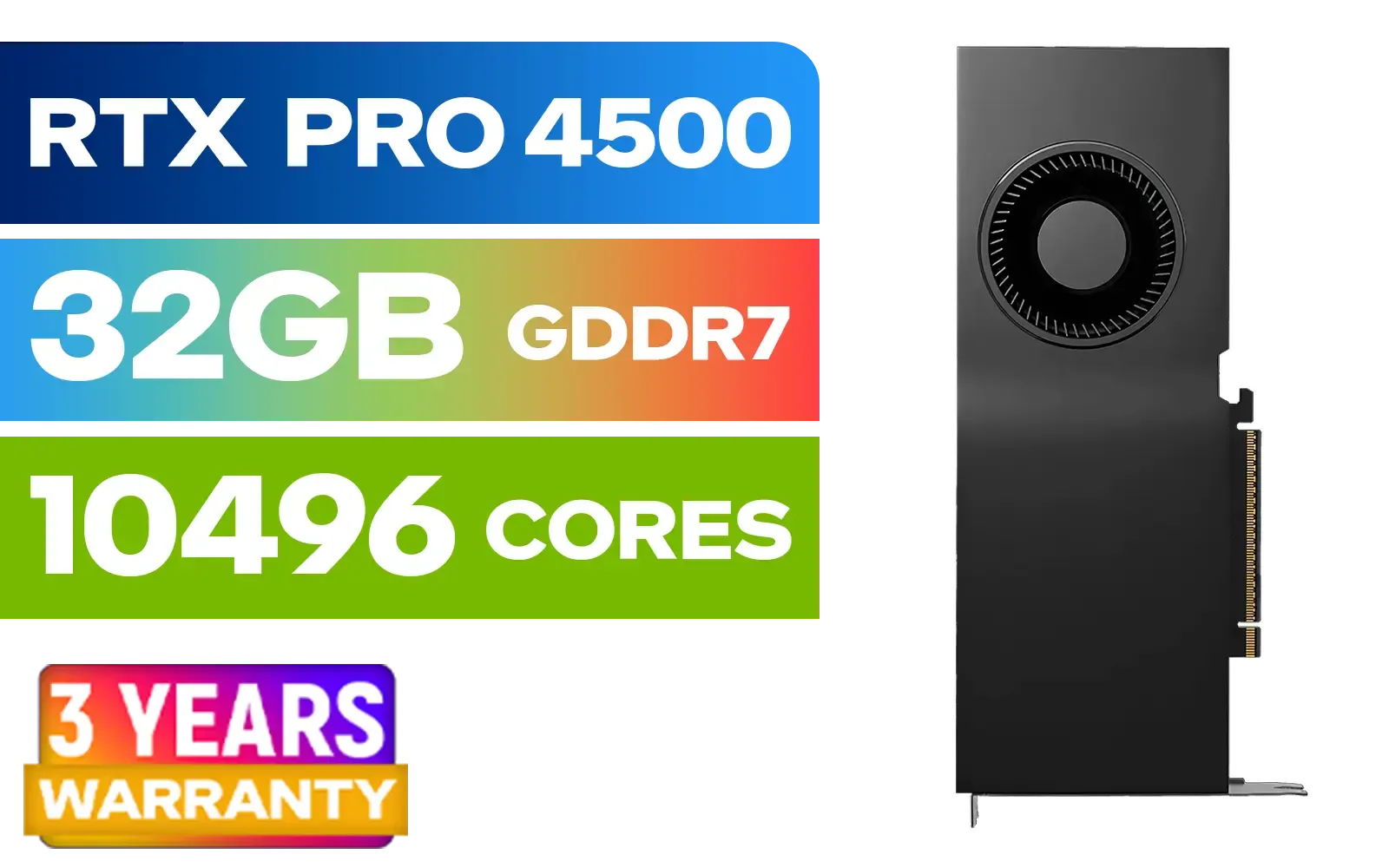

Why High Bandwidth is Crucial for AI and Machine Learning

When you're training a large language model or running complex simulations, your system is constantly shuffling enormous chunks of data. High GPU bandwidth for AI keeps that movement fast, which translates into faster training times and quicker iterations whenever the workload is limited by memory rather than compute.

A card like the GeForce RTX 4070 Ti SUPER, which boasts a memory bandwidth of 672GB/s, can keep its cores fed at a blistering pace. That helps it work through larger, more complex models without choking, as long as they fit in its 16GB of VRAM: capacity decides what fits, while bandwidth decides how quickly it moves. For professionals whose time is money, spending less time waiting for a model to train means more time for analysis and discovery. For the most demanding enterprise-level tasks, dedicated professional workstation graphics cards take this principle even further. ✨

Spotting a Bottleneck 🔧

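Use NVIDIA's nvidia-smi command in your terminal, or a tool like MSI Afterburner, to monitor your GPU while it trains. If the GPU is busy but its memory controller load (reported by nvidia-smi as memory utilisation) stays pinned near 100% for long stretches, you've likely found a memory bandwidth bottleneck: your GPU cores are spending time waiting for data. Treat this as a strong hint rather than proof; a profiler such as NVIDIA Nsight Compute can confirm it.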
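To make that check repeatable, here is a minimal Python sketch that polls nvidia-smi once per second and applies a simple threshold. The query fields utilization.gpu and utilization.memory are real nvidia-smi options (note that utilization.gpu measures how much of the time a kernel was running, not how many cores were busy), but the 90% and 80% thresholds and the helper names are hypothetical choices for illustration, and only the first GPU is read.

```python
import shutil
import subprocess
import time
from statistics import mean

# Both fields are percentages over the driver's most recent sample period.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,utilization.memory",
    "--format=csv,noheader,nounits",
]


def parse_sample(line: str) -> tuple[int, int]:
    """Parse one CSV line such as '87, 95' into (gpu_util, mem_util)."""
    gpu, mem = (int(field.strip()) for field in line.split(","))
    return gpu, mem


def looks_memory_bound(samples, mem_threshold=90, gpu_threshold=80) -> bool:
    """Hypothetical heuristic; the thresholds are illustrative assumptions, not NVIDIA guidance."""
    if not samples:
        return False
    avg_gpu = mean(s[0] for s in samples)
    avg_mem = mean(s[1] for s in samples)
    return avg_mem >= mem_threshold and avg_gpu >= gpu_threshold


def collect(seconds: int = 10):
    """Poll nvidia-smi once per second, keeping only the first GPU's line."""
    samples = []
    for _ in range(seconds):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        samples.append(parse_sample(out.splitlines()[0]))
        time.sleep(1)
    return samples


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; run this on a machine with an NVIDIA driver installed.")
    else:
        verdict = looks_memory_bound(collect())
        print("likely memory-bound" if verdict else "no clear bandwidth bottleneck")
```

Run it in a second terminal while training is under way; an idle GPU will never trip the heuristic.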

Decoding the Numbers: 672GB/s in a South African Context

So, what does 672 gigabytes per second actually feel like? At that rate, a card with 16GB of VRAM could, in theory, read through its entire memory in about 24 milliseconds, roughly 42 times every second. For a data scientist, this means that once your model's parameters, weights, and current batch of data are resident in VRAM, the cores can access them with very little waiting. (Getting data into VRAM in the first place happens over the PCIe link, which is much slower.)

This level of performance isn't exclusive to one brand or model, but it's a key differentiator in the high-end market. When comparing GPUs, don't just look at the core count or clock speed. Dig into the specs for the memory bus width and memory speed, because together they determine peak bandwidth: bandwidth (GB/s) = bus width (bits) ÷ 8 × memory speed (Gbps). Whether you're considering MSI's latest offerings or weighing up reliable options from Palit, calculating the memory bandwidth gives you a clearer picture of a card's real-world data-crunching power.
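The bus-width-and-speed calculation is simple enough to script. This sketch computes theoretical peak bandwidth from published bus widths and memory speeds; real-world throughput will land somewhat below these peaks.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer across the bus x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, memory speed in Gbps) from each card's published specs.
cards = {
    "RTX 4070 Ti SUPER (256-bit, 21Gbps GDDR6X)": (256, 21.0),
    "RTX 5070 Ti (256-bit, 28Gbps GDDR7)": (256, 28.0),
    "RTX 5070 (192-bit, 28Gbps GDDR7)": (192, 28.0),
    "RTX 5060 Ti (128-bit, 28Gbps GDDR7)": (128, 28.0),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(bus, rate):.0f} GB/s")
```

Note how the RTX 5070's narrower 192-bit bus at 28Gbps reaches the same 672GB/s as the RTX 4070 Ti SUPER's 256-bit bus at 21Gbps: faster memory can compensate for a narrower bus.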

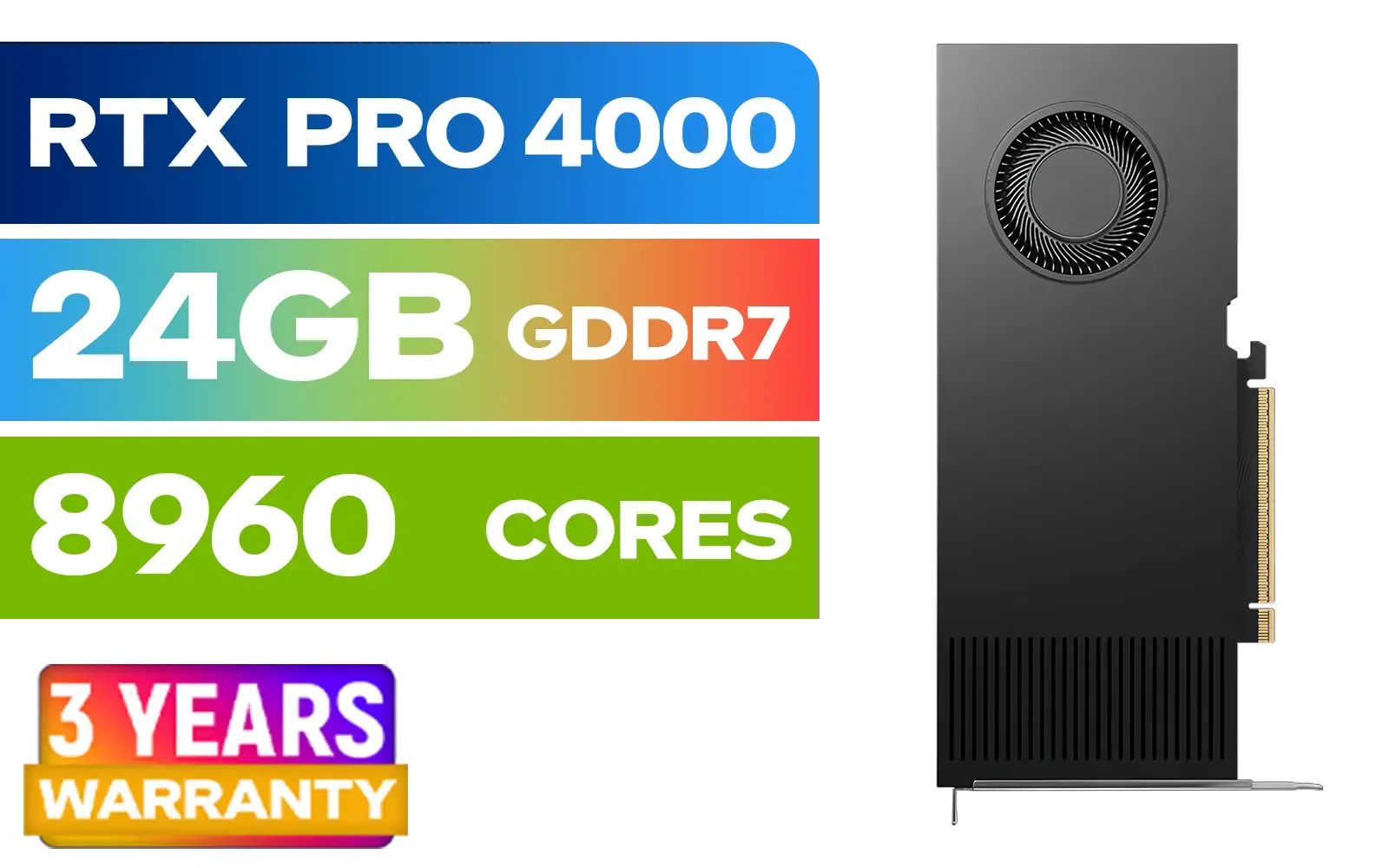

Future-Proofing Your Data Science Rig

The world of AI is moving incredibly fast. Models keep growing, and datasets are becoming larger and more complex. Today's high-end memory bandwidth is likely to become tomorrow's mid-range standard. Investing in a GPU with significant bandwidth headroom is one of the smartest ways to future-proof your workstation.

This means keeping an eye on newer generations. The GeForce RTX 50 series, including the RTX 5070 and RTX 5060 families, moves to GDDR7 memory running at 28Gbps or faster, which lifts bandwidth at each tier, so compare those figures before you buy. Your goal should be to build a machine that won't just run today's models, but will also be ready for the challenges of tomorrow. ⚡

Ready to Unshackle Your Data? Don't let a hidden bottleneck slow down your next big discovery. Upgrading your GPU is often the single biggest leap you can make in data science performance. Explore our range of workstation and AI-ready graphics cards and build the machine your projects deserve.

Frequently Asked Questions

High memory bandwidth lets the GPU (or CPU) read large datasets and model weights faster, which can significantly reduce the time required to train complex machine learning models, especially when the workload is memory-bound.

At 672GB/s, a GPU's cores are far less likely to be starved of data, so the parameters of large neural networks can be streamed to the cores quickly enough for near-real-time processing.

Yes. Slow memory creates a bottleneck where the GPU waits for data, so faster memory bandwidth typically translates into shorter epoch times, particularly for memory-bound workloads.

Both VRAM capacity and bandwidth are vital: capacity decides whether a model fits, while bandwidth often limits speed in large language models (LLMs), where moving weights from memory to the cores is the primary constraint.
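A back-of-the-envelope sketch shows why bandwidth dominates LLM inference. When generating text one token at a time (batch size 1), each weight is typically read from VRAM once per token, so bandwidth divided by model size gives a rough ceiling on tokens per second. This ignores the KV cache, activations, batching, and compute limits, and the 7-billion-parameter model is a hypothetical example.

```python
def max_decode_tokens_per_s(params_billions: float, bytes_per_param: float,
                            bandwidth_gbs: float) -> float:
    """Rough ceiling on single-stream (batch size 1) decode speed.

    Assumes every weight is streamed from VRAM once per generated token and
    ignores the KV cache, activations, and any compute limits.
    """
    model_gb = params_billions * bytes_per_param  # billions of params x bytes each = GB
    return bandwidth_gbs / model_gb


BANDWIDTH_GBS = 672.0  # the figure discussed in this article

# Hypothetical 7B-parameter model at two precisions.
for label, bytes_per_param in [("FP16", 2.0), ("4-bit", 0.5)]:
    tps = max_decode_tokens_per_s(7.0, bytes_per_param, BANDWIDTH_GBS)
    print(f"7B {label}: at most ~{tps:.0f} tokens/s")
```

At FP16 (about 14GB of weights) the ceiling on a 672GB/s card is 48 tokens per second; at 4 bits per weight (about 3.5GB) it rises to roughly 192, which is why shrinking the bytes moved per token speeds up generation even when compute is unchanged.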

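This speed is typically found in GPUs with fast dedicated memory, such as GDDR6X or GDDR7 cards with a wide memory bus, or in specialised memory architectures designed for HPC. CPU-based workstations fall well short: even an 8-channel DDR5-4800 configuration peaks at about 307GB/s.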

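You can use benchmarking tools like AIDA64 (read/write throughput and latency) or the STREAM benchmark (sustained bandwidth) to measure your system's actual memory performance. These test CPU-side system RAM; to check a GPU's VRAM bandwidth, use a GPU-side test such as the bandwidthTest sample in NVIDIA's CUDA Samples.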
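For a quick feel of what such tools measure, this Python sketch times a large in-memory buffer copy, similar in spirit to the Copy kernel of the STREAM benchmark (it is not STREAM itself). It measures host (CPU) RAM rather than GPU VRAM, the buffer size and repeat count are arbitrary assumptions, and a dedicated tool will give more reliable numbers.

```python
import time


def copy_bandwidth_gbs(size_mib: int = 256, repeats: int = 5) -> float:
    """Rough host-RAM copy bandwidth, counting size bytes read plus size bytes written per copy."""
    size = size_mib * 1024 * 1024
    src = bytearray(b"\xa5") * size   # non-zero fill so every page is actually touched
    dst = bytearray(size)
    view = memoryview(dst)
    view[:] = src                     # warm-up copy, not timed
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        view[:] = src                 # one bulk copy of the whole buffer
        best = min(best, time.perf_counter() - start)
    return 2 * size / best / 1e9      # (bytes read + bytes written) / seconds, in GB/s


if __name__ == "__main__":
    print(f"~{copy_bandwidth_gbs():.1f} GB/s host memory copy bandwidth")
```

Keep the buffer well above your CPU's cache size; with small buffers you end up timing the cache rather than RAM.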