RAM for Running LLMs Locally: Optimizing Your Setup

🚀 Discover how RAM impacts local LLM performance. Learn to allocate memory, boost AI speed, and avoid bottlenecks for seamless model operation.

So, you want to dive into the world of local AI and run Large Language Models (LLMs) on your own machine? That’s brilliant. It's the new frontier for tech enthusiasts in South Africa. But while everyone talks about needing a beastly GPU, there's a silent partner in this operation that's just as crucial: your system RAM. Getting the right amount and type of RAM for running LLMs locally can be the difference between smooth sailing and a frustrating, laggy mess.

Why System RAM Matters (It's Not Just VRAM)

Think of your GPU's VRAM as a specialist's workbench: it holds the active part of the model for processing. Your system RAM is the entire workshop. It's where the model weights are loaded from your SSD before they reach the GPU, where any layers that don't fit in VRAM are kept (and run on the CPU, in tools that can split a model this way), where your data gets pre-processed, and where your operating system juggles everything in the background. If this workshop is too small, everything grinds to a halt, no matter how skilled the specialist is. Optimizing your setup means keeping this whole workflow balanced.
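To see why the "workshop" matters, here is a rough sketch of how a model gets split between VRAM and system RAM. Tools such as llama.cpp let you choose how many layers go to the GPU (its `--n-gpu-layers` option); the rest stay in system RAM. The helper `split_layers` below is hypothetical, and it assumes every layer is the same size and ignores the context cache and runtime buffers, so treat the numbers as a ballpark only.

```python
def split_layers(model_gb: float, n_layers: int, vram_gb: float,
                 vram_reserve_gb: float = 1.0) -> tuple[int, float]:
    """Hypothetical helper: how many equally sized layers fit in VRAM,
    and how many GB of weights are left over for system RAM."""
    per_layer_gb = model_gb / n_layers
    # Keep some VRAM free for the display and runtime buffers (assumed 1 GB)
    usable_vram = max(vram_gb - vram_reserve_gb, 0.0)
    gpu_layers = min(n_layers, int(usable_vram // per_layer_gb))
    ram_gb = (n_layers - gpu_layers) * per_layer_gb
    return gpu_layers, ram_gb

# Example: a ~40 GB model with 80 layers on a 12 GB graphics card
gpu, ram = split_layers(model_gb=40.0, n_layers=80, vram_gb=12.0)
print(f"{gpu} layers on GPU, {ram:.1f} GB of weights in system RAM")
```

In this example, most of the model (about 29 GB) lives in system RAM, which is exactly the situation where capacity and memory speed start to dominate.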

Corsair Vengeance RGB DDR5 64GB (4x16GB) 6000MHz C36 Intel Optimized Desktop Memory (Dynamic Ten-Zone RGB Lighting, Onboard Voltage Regulation, Custom XMP 3.0 Profiles, Tight Response Times) Black / CMH32GX5M2E6000C36x2

Teamgroup T-Force DELTA RGB 32GB DDR5 Desktop Memory - Black / 32GB (2x16GB) / 7200MHz / CL34 / FF3D532G7200HC34ADC01

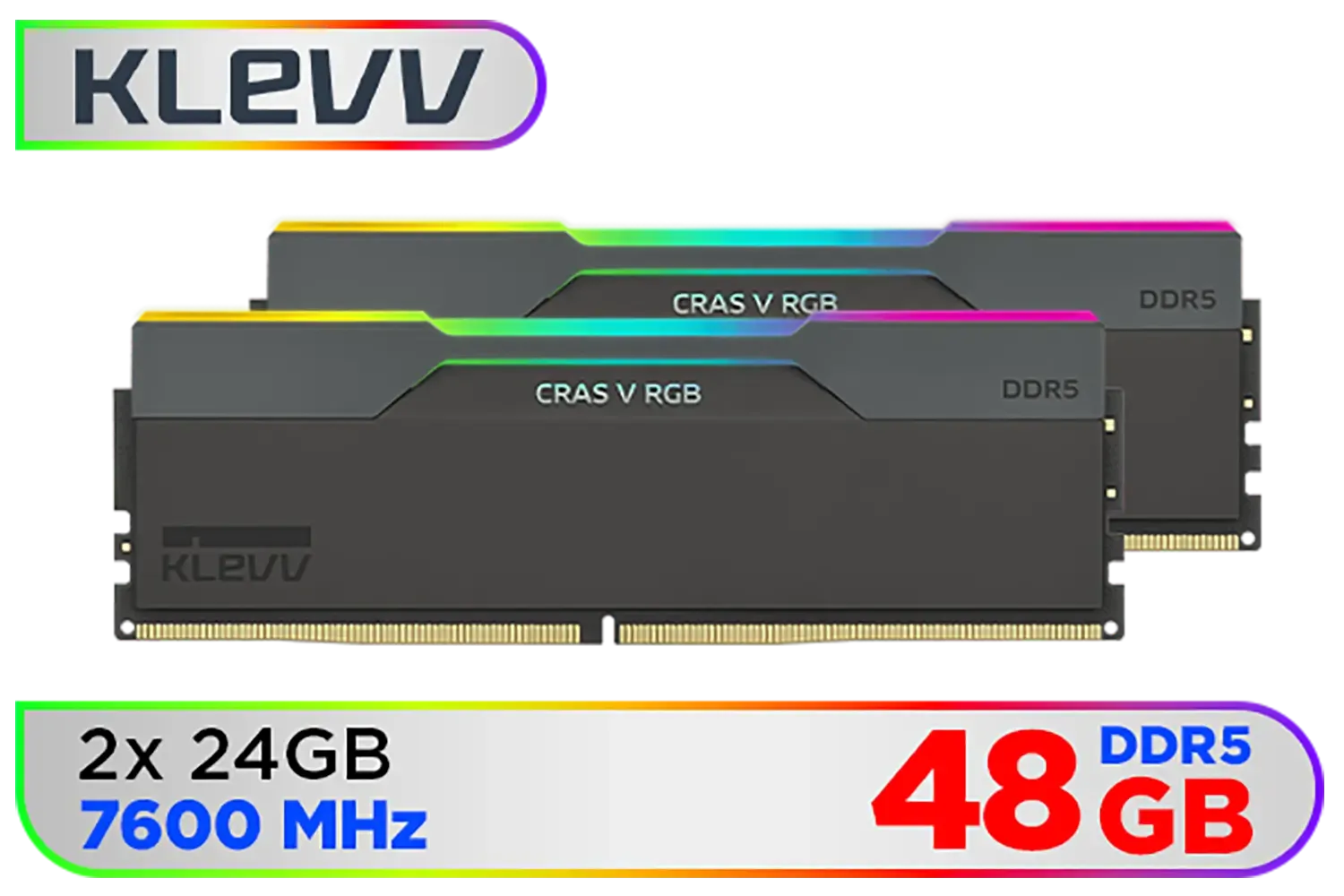

KLEVV CRAS V RGB 32GB Kit (16GB x2) 7200MHz Gaming Memory DDR5 RAM XMP 3.0/AMD EXPO High Performance Overclocking / KD5AGUA80-72B340G

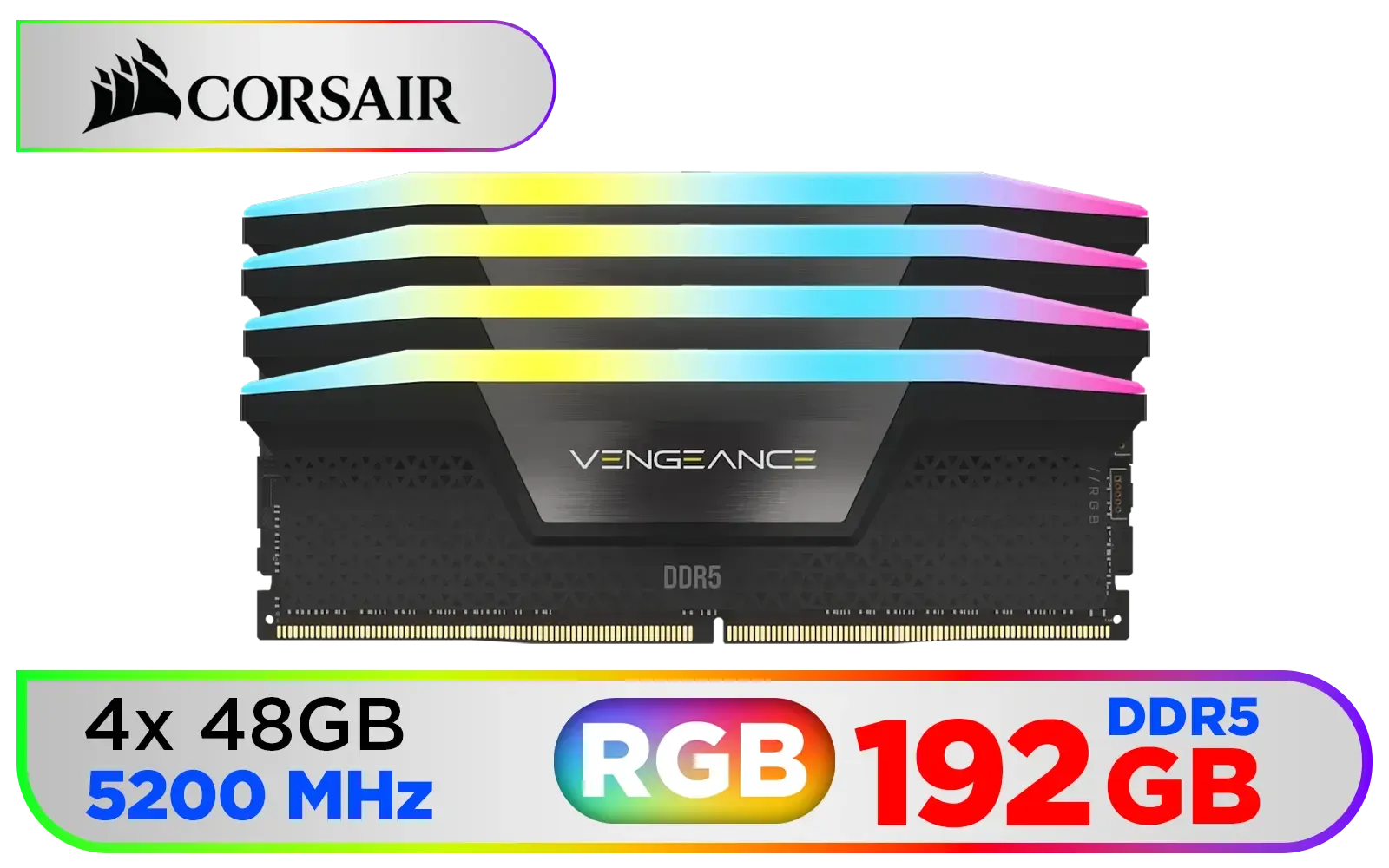

Corsair Vengeance RGB DDR5 192GB (4x48GB) DDR5 5200MHz CL38 Memory – Black / Ten-zone panoramic RGB lighting / Custom Intel XMP 3.0 profiles / Onboard voltage regulation / CMH192GX5M4B5200C38

ADATA XPG Lancer RGB 48GB (2 x 24GB) DDR5 DRAM 7200MHz Desktop Memory - Black / High-Quality Materials / Customizable RGB light effects / Supports Intel® XMP 3.0 / AX5U7200C3424G-DCLARBK

Corsair Vengeance RGB DDR5 96GB (2x48GB) DDR5 5600MHz CL40 Memory – Black / Ten-zone panoramic RGB lighting / Custom Intel XMP 3.0 profiles / Onboard voltage regulation / CMH96GX5M2B5600C40

Finding Your RAM Sweet Spot for Local LLMs

You don’t need to break the bank to get started. The key is to match your RAM to your ambition and budget.

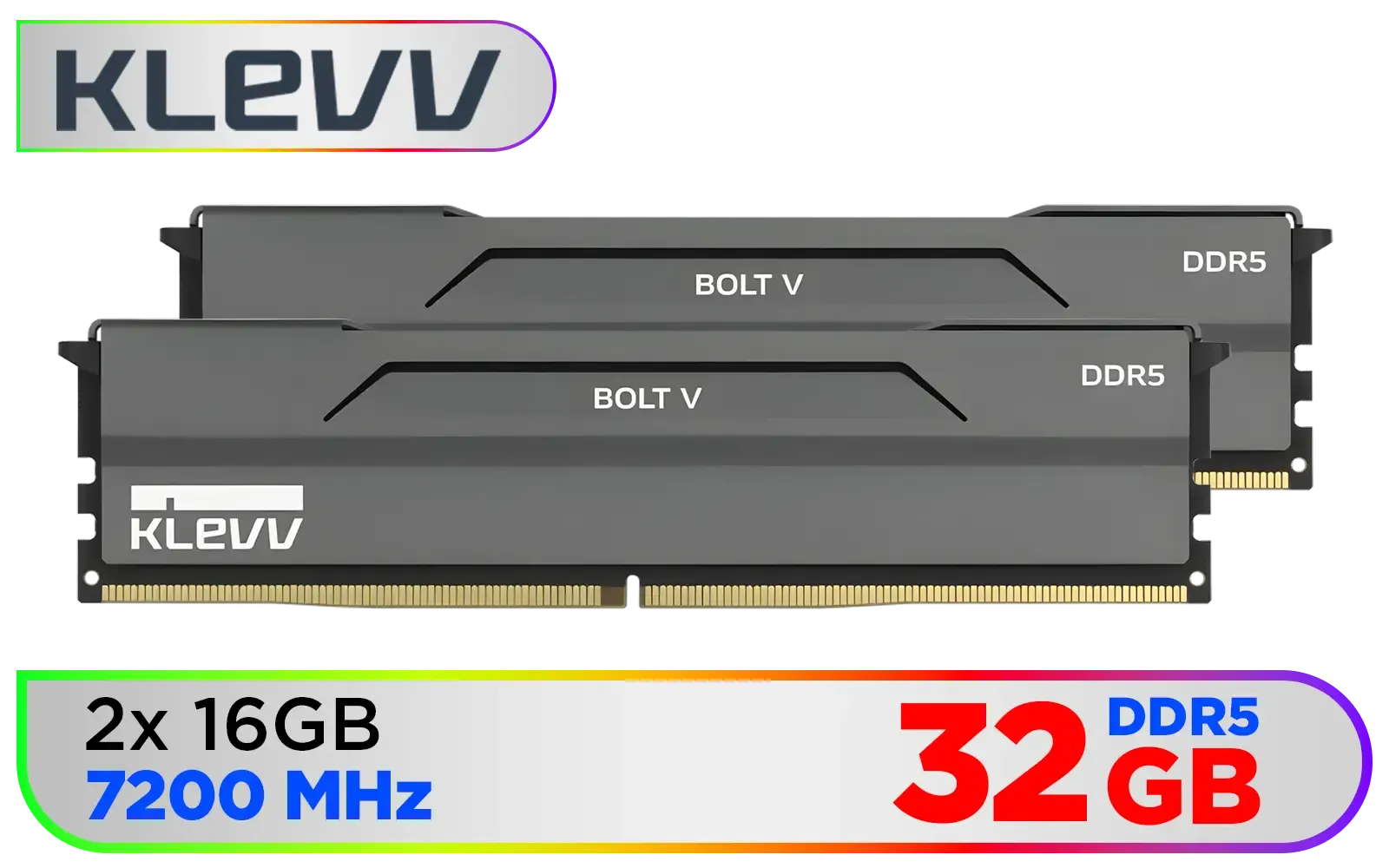

The Budget-Friendly Start: 32GB

For anyone just starting out or experimenting with smaller, optimised models (such as 7B-parameter models), 32GB of system RAM is a fantastic and affordable starting point. It gives you enough breathing room to load models and handle data without constant slowdowns. Because 32GB is easy to reach on older platforms, high-quality DDR4 memory is an incredible value-for-money choice in South Africa, letting you build a capable machine on a tight budget. ✨
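Why is 32GB enough for a 7B model? A quick back-of-the-envelope estimate: the weights take roughly (parameters × bits per weight ÷ 8) bytes. The sketch below assumes idealised bit widths; real quantised files are somewhat larger because they also store scaling factors, and the running model needs extra memory for its context.

```python
# Approximate bits stored per weight (assumption: ignores per-format overhead)
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}

def weights_gb(params_billion: float, fmt: str) -> float:
    """Rough size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BITS_PER_WEIGHT[fmt] / 8

for fmt in BITS_PER_WEIGHT:
    print(f"7B @ {fmt}: ~{weights_gb(7, fmt):.1f} GB")
```

Even the unquantised FP16 version (~14 GB) leaves headroom in 32GB for the operating system and your other apps, while a 4-bit version needs only a few gigabytes.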

The Serious Hobbyist's Choice: 64GB+

Are you planning to run larger models, fine-tune them with your own data, or multitask heavily while the AI works its magic? This is where 64GB or more becomes essential. Stepping up to a modern platform with high-speed DDR5 memory is the best way forward. DDR5's higher bandwidth speeds up data loading and pre-processing, and it matters even more when part of a model runs on the CPU, because token generation there is limited largely by how fast the weights can be read from RAM. This is the path for future-proofing your setup. 🚀
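Here is a rough way to compare platforms. Theoretical peak bandwidth is the transfer rate × 8 bytes (a 64-bit channel) × the number of channels; the "6000MHz" on a DDR5 kit is really 6000 MT/s. A common rule of thumb for CPU inference of a dense model is that each generated token reads roughly all the weights once, so bandwidth ÷ model size gives an upper bound on tokens per second. The function names are illustrative, and real-world speeds land well below these ceilings.

```python
def peak_bandwidth_gbs(mts: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak in GB/s: transfers/s x 64-bit (8-byte) channel width x channels."""
    return mts * bus_bytes * channels / 1000

def max_tokens_per_s(bandwidth_gbs: float, model_gb: float) -> float:
    """Rule-of-thumb ceiling for a dense model: every token reads all weights once."""
    return bandwidth_gbs / model_gb

# Dual-channel desktop kits, 4 GB quantised model (assumed example sizes)
for name, mts in [("DDR4-3200", 3200), ("DDR5-6000", 6000)]:
    bw = peak_bandwidth_gbs(mts)
    print(f"{name}: {bw:.1f} GB/s, at most ~{max_tokens_per_s(bw, 4.0):.0f} tokens/s")
```

The jump from about 51 GB/s to 96 GB/s is why DDR5 pays off once model layers spill out of VRAM.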

The Virtual Memory Lifeline

If you're in a pinch and your physical RAM is maxed out, you can increase your virtual memory (page file). Set it to a large, fixed size on your fastest SSD. It's not a replacement for real RAM and will be much slower, but it can be the difference between a process completing or crashing.
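Before leaning on the page file, it helps to know which side of the line you're on. This is a hypothetical sketch: `memory_verdict` and the 4 GB allowance for Windows and background apps are assumptions, not measured values, and actual usage will vary with context size and software.

```python
def memory_verdict(model_gb: float, ram_gb: float, pagefile_gb: float,
                   os_overhead_gb: float = 4.0) -> str:
    """Hypothetical check: does a model fit in RAM, only with the page file, or not at all?"""
    free_ram = ram_gb - os_overhead_gb  # assumed OS + background usage
    if model_gb <= free_ram:
        return "fits in RAM"
    if model_gb <= free_ram + pagefile_gb:
        return "needs page file (expect heavy slowdown)"
    return "will not fit"

print(memory_verdict(model_gb=14, ram_gb=32, pagefile_gb=0))
print(memory_verdict(model_gb=40, ram_gb=32, pagefile_gb=32))
```

The middle case is where a large page file keeps the job alive, at the cost of speed.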

Using What You've Got: The Old School Approach

Got an old PC gathering dust? While not ideal for heavy lifting, a machine with older DDR3 gaming RAM modules can still be a zero-cost entry point. You won't be running massive models, but you can absolutely use it to install the software, learn the command line tools, and understand the workflow. Don't let perfect be the enemy of good when you're just starting to learn.

Optimizing your setup is about making smart choices. Start with what you can afford, understand the bottlenecks, and upgrade where it counts most. For local LLMs, a healthy amount of system RAM is a truly powerful investment.

Future-Proof Your AI Rig

Ready to stop the stuttering and give your AI projects the speed they deserve? Shop our range of high-speed DDR5 memory at Evetech and build a machine for the future.

8GB–64GB of DDR4/DDR5 is recommended; the right amount depends on the size of the model you run, such as Llama 3 or GPT-J.

Faster memory delivers more bandwidth, which speeds up token generation, especially when part of the model runs on the CPU. Opt for 3200MHz+ for real-time AI responses.

Yes! Too little RAM causes out-of-memory (OOM) errors during model loading or batch processing.

Use memory-efficient frameworks like llama.cpp and run quantized models to reduce memory overhead.
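Quantization shrinks a model by storing each weight in fewer bits. The sketch below shows only the basic idea, using simple symmetric 8-bit quantization with one scale per tensor. It is a simplified illustration, not llama.cpp's actual formats, which use more elaborate block-wise schemes down to 4 bits and below.

```python
from array import array

def quantize_int8(weights: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / 127
    q = array("b", (round(w / scale) for w in weights))  # 1 byte per weight
    return q, scale

def dequantize(q: array, scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]

weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.07])  # float32: 4 bytes per weight
q, scale = quantize_int8(list(weights))
print(weights.itemsize * len(weights), "bytes as float32")
print(q.itemsize * len(q), "bytes as int8")
print("max error:", max(abs(a - b) for a, b in zip(weights, dequantize(q, scale))))
```

Memory drops to a quarter of the float32 size, while each weight is off by at most half a quantization step.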

ECC helps prevent data corruption during large-scale training, but it isn't critical for basic local use.

Lower latency can modestly improve token generation speed, though memory bandwidth usually has a bigger effect. Large context windows also increase memory use, so capacity matters too.
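Large context windows cost memory because the model keeps a key/value (KV) cache that grows linearly with the number of tokens: one key and one value vector per layer, per KV head, per token. The sketch below assumes a Llama 3 8B-style shape (32 layers, 8 KV heads, head dimension 128) and a 16-bit cache; other models and runtime settings will differ.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: K and V (factor 2) per layer, per KV head, per token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * context_tokens

# Assumed Llama 3 8B-style shape with an FP16 cache
for ctx in (2048, 8192):
    gib = kv_cache_bytes(32, 8, 128, ctx) / 2**30
    print(f"{ctx} tokens: {gib:.2f} GiB")
```

Quadrupling the context quadruples the cache, which sits on top of the memory the weights already use.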

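Use system tools such as Task Manager (Windows) or htop (Linux) to track memory consumption in real time. Benchmark suites like MLPerf measure overall performance rather than live memory use.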
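If your workflow is scripted in Python, the standard library's `tracemalloc` module can report peak memory for a step. It only sees allocations made through Python's allocators, so memory that native libraries map or allocate themselves (as many LLM runtimes do) won't show up; use the system tools for the full picture. `fake_load` is a hypothetical stand-in for a real loading step.

```python
import tracemalloc

def peak_python_memory(fn, *args):
    """Run fn(*args) and return (result, peak bytes traced by tracemalloc)."""
    tracemalloc.start()
    try:
        result = fn(*args)
        _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak

def fake_load(n_bytes: int) -> bytes:
    # Hypothetical stand-in for reading model weights into memory
    return bytes(n_bytes)

data, peak = peak_python_memory(fake_load, 50_000_000)
print(f"peak: {peak / 1e6:.1f} MB")
```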

No. Swap memory slows inference. Adequate physical RAM ensures smooth LLM operation.